World Models

A world model is an AI system that predicts future states of an environment given a sequence of actions. World models have made the news recently, with Google DeepMind and Meta AI (FAIR) announcing their own versions, Genie 3 and V-JEPA 2 respectively. I was inspired by earlier work on world modeling in video games, in particular DIAMOND, which contributed to my shift away from sim2real approaches for training robots. To learn more about these systems, I decided to try to train a DIAMOND-like model to control a JetBot. The original goal was to have the JetBot learn a world model and use it to move toward a Kong dog toy in its field of view. This eventually got simplified to having the JetBot use one motor to turn toward the Kong and stop when the Kong was centered in its view.

I kept a log while working on the project, so rather than summarize what I did in detail (which your favorite LLM can probably do better), I’ll focus on what I found interesting about the project.

Many links in the log are probably broken because of access settings I haven’t gotten around to making public, but I’m sharing things now in case anyone finds them interesting or useful. If there are particular links you’re interested in, feel free to reach out and I can update the access settings.

Building AI vs. Software

Developing AI software felt very different from my past experience making other types of software. My professional background is in web apps, plus some data and analytics software engineering. “Normal” software engineering feels more straightforward in terms of knowing whether a system is right for the problem and whether it’s working. If things aren’t working, you can usually trace the problem back to an error in the code or configuration. Training a model feels more open-ended. You might have the system set up correctly, but not enough data or not the right type of data. Maybe you didn’t train for long enough or with the right hyperparameters. Maybe the system isn’t right and you should be using a different architecture. Maybe the general architecture is right and you just need to add more layers, add more neurons to each layer, or use a different type of normalization. Or maybe there is an error in the implementation. Somewhat ironically, writing software feels like following a gradient from beginning to end, whereas training a model sometimes feels like a random walk, at least for a novice like me. I’m guessing that experience builds intuition for what to try next when things aren’t working, but right now it often feels like trying a bunch of things until something works.

AI Assistance

I started using LLMs a bit in my last project, but I leaned heavily into them for this one, using them for everything from brainstorming high-level plans to learning technical concepts to implementing ideas. I started off copying and pasting code from the ChatGPT and Gemini web interfaces. By the end, I was mainly using OpenAI’s Codex web interface (and, to a lesser extent, Google’s Jules) to create pull requests against my repo. I’d check out the branch, test it, have the agents make revisions (or, less often, make them myself), and merge the changes once I was satisfied. AI didn’t just speed up development on the project; it helped solve problems I probably wouldn’t have solved on my own. I’d often ask for something to be implemented, and while the agent was generating the pull request, or while a model was training, I’d ask ChatGPT or Gemini to help me understand the code it had just written. I did get lazier about following every line of code as the project went on. This came back to bite me when I missed a fairly simple bug: a model expected the index of an action, but the generated code was passing the action value. Because training is so open-ended, as I mentioned earlier, I went down several other avenues of exploration before finding this bug some two months later. Overall, I’d still say AI assistance has been a huge net positive, and I plan to keep using it extensively.
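
The index-versus-value mix-up is easy to reproduce in miniature. The sketch below assumes a hypothetical discrete action set `ACTION_VALUES` for the left motor (the values are illustrative, not the JetBot’s) and a stand-in `lookup_action_embedding` for an embedding layer that expects an integer index. The explicit checks make a mismatch fail loudly at the call site rather than quietly feeding the model the wrong input.

```python
# Hypothetical discrete action set for the left motor; values are illustrative.
ACTION_VALUES = [0.0, 0.3]  # index 0 = motor off, index 1 = motor on


def action_to_index(value: float) -> int:
    """Convert a raw motor value into its index in ACTION_VALUES."""
    for i, v in enumerate(ACTION_VALUES):
        if abs(v - value) < 1e-6:
            return i
    raise ValueError(f"unknown action value: {value!r}")


def lookup_action_embedding(table: list, action_index: int) -> list:
    """Stand-in for an embedding layer: only accepts a valid integer index."""
    if not isinstance(action_index, int) or isinstance(action_index, bool):
        raise TypeError(f"expected an action index, got {action_index!r}")
    if not 0 <= action_index < len(table):
        raise IndexError(f"action index out of range: {action_index}")
    return table[action_index]


# A toy "embedding table" with one vector per action.
EMBEDDINGS = [[0.1, 0.2], [0.3, 0.4]]

# Correct usage: convert the value to an index before the lookup.
print(lookup_action_embedding(EMBEDDINGS, action_to_index(0.3)))
```

Passing the raw value instead, as in `lookup_action_embedding(EMBEDDINGS, 0.3)`, raises a `TypeError` in this sketch.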

Automated Reward Signal

A good example of AI doing more than speeding up development was its help in building the reward model. Reward is the feedback a robot uses to learn whether it is accomplishing its task. In video games, it’s easy for the programmer to send the reward signal to the learner as it plays the game. One thing that puzzled me was how to do this efficiently in the real world with robots. For the JetBot task of centering the Kong in its view, the reward at each time step should be higher when the Kong is closer to the center and lower when it isn’t.

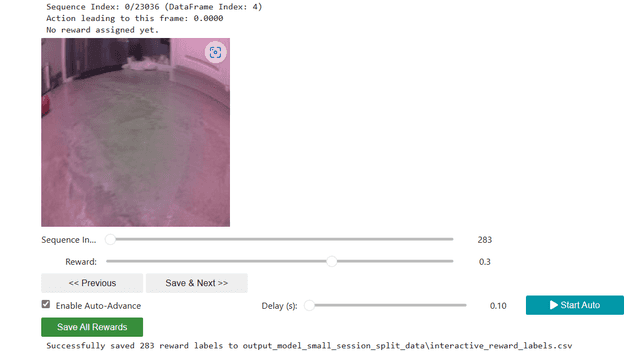
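
In a game, the programmer knows exactly where every object is, so a reward like the one sketched below is trivial to compute. This sketch assumes you already know the Kong’s horizontal pixel position `kong_x` (a hypothetical input), which is exactly what’s hard to get from a real camera feed and why a learned reward estimator is appealing. The 224-pixel frame width in the example is also an assumption.

```python
from typing import Optional


def centering_reward(kong_x: Optional[float], frame_width: int) -> float:
    """Reward in [0, 1]: 1.0 when the Kong is horizontally centered, 0.0 at either edge.

    kong_x is the Kong's horizontal pixel position, or None if it is not visible.
    """
    if kong_x is None:
        return 0.0  # no Kong in view: lowest reward
    half_width = frame_width / 2
    offset = abs(kong_x - half_width) / half_width  # 0.0 at center, 1.0 at an edge
    return max(0.0, 1.0 - offset)


for x in (112, 56, 0, None):
    print(x, centering_reward(x, frame_width=224))
```

The reward falls off linearly with distance from the center; any shape that peaks at the center would express the same task.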

I decided to try training a model to estimate reward. I started by making an interface to play back videos collected while the JetBot ran and to assign rewards to frames as I watched [reward labeling notebook]. I then used the labeled data to train a reward estimator, which sort of worked but wasn’t very reliable and probably needed more data.

Manually labeling reward data didn’t seem scalable, but when I asked ChatGPT about the state of my project, it suggested using a vision-language model (VLM) for reward prediction. The basic idea is to describe your goal state in a sentence; the estimated reward is how closely an image matches that description. In my case, the positive prompt was ‘red Kong dog toy centered in the frame’ and the negative prompt was ‘an empty kitchen floor with no toy’. I first tried a VLM out of the box, but it didn’t perform well. I then fine-tuned it on the data I had collected to train my initial reward estimator [clip finetuning notebook]. This worked much better than the model I had trained from scratch and, more importantly, it generalized to areas where I hadn’t collected data.

[clip reward estimator notebook]
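
One common way to turn a positive and a negative prompt into a reward is a two-way softmax over image-text similarities, as in CLIP zero-shot classification. The sketch below assumes the embeddings have already been computed (for example with `get_image_features` and `get_text_features` on Hugging Face’s `CLIPModel`) and uses toy 2-D vectors in their place. The scoring rule and the temperature of 0.01 are assumptions, not necessarily what the fine-tuned estimator used.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def prompt_reward(
    image_emb: Sequence[float],
    positive_emb: Sequence[float],
    negative_emb: Sequence[float],
    temperature: float = 0.01,
) -> float:
    """Probability (0 to 1) that the image matches the positive prompt over the negative one."""
    s_pos = cosine_similarity(image_emb, positive_emb) / temperature
    s_neg = cosine_similarity(image_emb, negative_emb) / temperature
    # A softmax over two logits equals a logistic function of their difference.
    return 1.0 / (1.0 + math.exp(s_neg - s_pos))


# Toy 2-D embeddings standing in for real CLIP features.
positive = [1.0, 0.0]  # "red Kong dog toy centered in the frame"
negative = [0.0, 1.0]  # "an empty kitchen floor with no toy"
print(prompt_reward([0.9, 0.1], positive, negative))  # close to 1
print(prompt_reward([0.1, 0.9], positive, negative))  # close to 0
```

The negative prompt matters: it turns an absolute similarity score, which is hard to interpret on its own, into a relative judgment between two described states.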

I think this approach to defining rewards and tasks is going to be useful for general robot learning. One can imagine defining more complex tasks by describing not just the state captured in a single image, but what happens in an entire video clip and then having the VLM score it.

Interesting Problems

Three problems for world modeling stood out to me as I worked on this project. The first was making predictions at different time scales. The second was automating data collection for training. The third was training on data incrementally.

Video is recorded at 30 Hz during data collection and can be downsampled when building the training dataset. I initially set the rate to 5 Hz, but it was hard to discern movement between successive frames, which can make planning harder. Lower rates made motion more apparent, which made it easier to decide whether to keep turning. This made me think about how human planning happens on different time scales and how we use abstract, high-level actions to plan. I’m not sure whether or how world models do this, but I think it’s important to explore.
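
Downsampling a 30 Hz recording amounts to keeping every k-th frame. The sketch below uses a hypothetical helper, `downsample_indices`, that assumes the target rate divides the source rate evenly; 2 Hz is just an example of a lower rate. It also prints the time between kept frames, which is what grows as the rate drops and makes motion easier to see.

```python
def downsample_indices(num_frames: int, source_hz: int = 30, target_hz: int = 5) -> list[int]:
    """Indices of the frames kept when subsampling a source_hz video to target_hz."""
    if source_hz % target_hz != 0:
        raise ValueError("this sketch assumes target_hz divides source_hz evenly")
    stride = source_hz // target_hz  # e.g. 30 Hz -> 5 Hz keeps every 6th frame
    return list(range(0, num_frames, stride))


for hz in (5, 2):
    kept = downsample_indices(num_frames=30, target_hz=hz)
    print(f"{hz} Hz: frames {kept}, {1 / hz:.1f} s between frames")
```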

Data collection consisted of having the JetBot turn its left motor on and off for a pre-specified number of intervals of random length. I would then move the Kong and start the process again [data collection notebook]. This was the most labor-intensive part of the project. Thinking about how to automate data collection for training world models reminded me of motor babbling in babies. The idea of using a world model’s mispredictions to guide exploration and data collection also seems right to me. These ideas fall into a research area called developmental robotics, which I’d like to understand better.
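
A randomized motor schedule like the one described can be generated ahead of time and then executed on the robot. In this sketch, the strict alternation between on and off, the 0.2 to 2.0 second duration range, and the `motor_schedule` name are all assumptions. The code that actually drives the JetBot’s motor is left out because it depends on the robot’s API.

```python
import random
from typing import List, Optional, Tuple


def motor_schedule(
    num_intervals: int,
    min_seconds: float = 0.2,
    max_seconds: float = 2.0,
    seed: Optional[int] = None,
) -> List[Tuple[bool, float]]:
    """Alternating (motor_on, duration_seconds) pairs with random durations."""
    rng = random.Random(seed)  # seeded so a collection run can be reproduced
    schedule = []
    for i in range(num_intervals):
        motor_on = i % 2 == 0  # start with the motor on, then alternate
        schedule.append((motor_on, rng.uniform(min_seconds, max_seconds)))
    return schedule


for motor_on, duration in motor_schedule(num_intervals=6, seed=0):
    print("on " if motor_on else "off", f"{duration:.2f} s")
```

Seeding the generator makes it possible to replay the exact schedule later, which helps when checking a recorded video against the commands that produced it.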

I used an RTX 3070 graphics card, which is about five years old, for most of the training. This meant training on the entire dataset took a while, and I would essentially have to start over whenever I wanted to add new data. Intuitively, it seems like there should be a way to learn continuously as data comes in, and the fields of online and lifelong learning tackle this problem. I started to set up experiments with incremental training on new data but didn’t get very far; I’d like to try it in my next project.
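
One common approach from continual learning is rehearsal (also called replay): fine-tune on batches that mix newly collected samples with samples replayed from older data, so the model adapts to the new data without forgetting the old. The `mixed_batch` helper below is a hypothetical sketch of that idea, not something from this project, and the 50/50 split is an arbitrary default.

```python
import random
from typing import List, Optional, Sequence, TypeVar

T = TypeVar("T")


def mixed_batch(
    new_data: Sequence[T],
    old_data: Sequence[T],
    batch_size: int,
    new_fraction: float = 0.5,
    rng: Optional[random.Random] = None,
) -> List[T]:
    """Sample a shuffled batch with roughly new_fraction of its items from new_data."""
    rng = rng if rng is not None else random.Random()
    n_new = min(len(new_data), round(batch_size * new_fraction))
    n_old = min(len(old_data), batch_size - n_new)
    batch = rng.sample(list(new_data), n_new) + rng.sample(list(old_data), n_old)
    rng.shuffle(batch)  # avoid all new samples sitting at the front of the batch
    return batch


old_clips = list(range(100))       # stand-ins for previously collected samples
new_clips = list(range(100, 110))  # stand-ins for freshly collected samples
batch = mixed_batch(new_clips, old_clips, batch_size=8, rng=random.Random(0))
print(sorted(batch))
```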

Next Steps

My goal was to apply a DIAMOND-style model to robotics. The task was to have a JetBot learn a world model and use it to control a single motor to center a Kong in its view. I was able to train a world model [training notebook] and had some early glimpses of success with the task, but it only worked some of the time. Despite the limited success, performance kept improving as I collected more data in regions where the robot wasn’t doing well. I decided this was a good stopping point: the remaining work seemed straightforward, and fully solving the task offered limited benefit for my next directions.

[jetbot model predictive control notebook]
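
Here is a minimal sketch of model predictive control with a learned world model. It assumes only two discrete actions (motor off or on), so every action sequence over a short horizon can be scored exhaustively. `world_model` and `reward_model` are hypothetical stand-ins: in a setup like this one, the world model would predict the next camera frame and the reward model would score it (for example with the fine-tuned CLIP estimator). Only the first action of the best sequence is executed before replanning from the new observation.

```python
import itertools
from typing import Callable, Sequence, TypeVar

S = TypeVar("S")  # state type, e.g. a predicted frame
A = TypeVar("A")  # action type, e.g. 0 = motor off, 1 = motor on


def plan_first_action(
    state: S,
    world_model: Callable[[S, A], S],
    reward_model: Callable[[S], float],
    actions: Sequence[A],
    horizon: int,
) -> A:
    """Score every action sequence of length horizon; return the best one's first action."""
    best_return = float("-inf")
    best_first = actions[0]
    for sequence in itertools.product(actions, repeat=horizon):
        predicted, total = state, 0.0
        for action in sequence:
            predicted = world_model(predicted, action)  # imagined next state
            total += reward_model(predicted)
        if total > best_return:
            best_return, best_first = total, sequence[0]
    return best_first


# Toy stand-ins: the state is the Kong's angle from center in degrees,
# and turning the motor on for one step rotates the view by 10 degrees.
def toy_world_model(angle: float, action: int) -> float:
    return angle - 10.0 * action


def toy_reward(angle: float) -> float:
    return -abs(angle)  # highest when the Kong is centered


print(plan_first_action(25.0, toy_world_model, toy_reward, actions=[0, 1], horizon=3))
print(plan_first_action(0.0, toy_world_model, toy_reward, actions=[0, 1], horizon=3))
```

Exhaustive search costs 2^horizon rollouts with two actions, so longer horizons or larger action sets usually call for sampling-based planners such as random shooting or the cross-entropy method.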

I recently got a robot arm from Vassar Robotics, so I plan to continue working on world models and the problems I mentioned above with this new platform.